I ran both of these urls from your site:

https://campagna.photography/galleries/nature-outdoors/single.php?id=mc-20200924-3185

https://campagna.photography/galleries/nature-outdoors/mc-20200924-3185-single.php

Both work, but neither one is indexed. I believe that if you find one of your non-dynamic URLs that IS indexed by Google, you will find that its dynamic version is indexed too.

I tried a few others from your galleries, and they also came back as “not indexed.” The same tool reliably reports that my own pages, in both their non-dynamic and dynamic versions, are indexed.

I used: https://smallseotools.com/google-index-checker/

I should note that I don’t use that tool on any regular basis; for my own site I use Google Search Console. But to look at someone else’s property, or to check multiple domains or pages at once, I use the tool above.

In terms of blocking the /backlight directory, I am sure of what I am seeing. Within 24 hours of my blocking it, 18 pages were tagged with “Clickable elements too close together” and 14 were tagged with “Content wider than screen.” (I generate a new sitemap file every time I fiddle with the live version of my site, and the generator automatically pings Google to let the bots know there is an update.) As soon as I unblocked /backlight, the problem went away, again within 24 hours. The dates in the Google report agreed with what I was seeing.

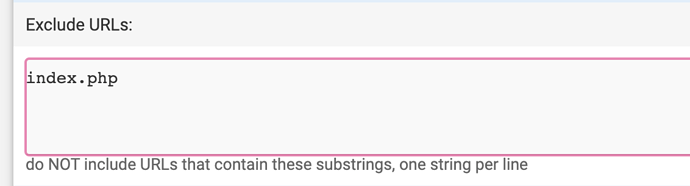
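
My guess, and it is only a guess since the report does not say so, is that Googlebot needs files under /backlight (stylesheets, scripts) to render the pages, and a Disallow on the directory hides all of them at once. The sketch below uses Python's standard urllib.robotparser to show how a directory-level rule applies to everything beneath it. The robots.txt lines, example.com and the file paths are made up for illustration; they are not copied from my site or yours.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, not copied from any real site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /backlight/
"""


def is_allowed(path, agent="Googlebot"):
    """Return True if the hypothetical robots.txt lets `agent` fetch `path`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    # example.com is a placeholder host; only the path matters for the rule.
    return parser.can_fetch(agent, "https://example.com" + path)


# Made-up paths: one asset under the blocked directory, one gallery page.
for path in ("/backlight/example/style.css", "/galleries/example/"):
    print(path, "allowed" if is_allowed(path) else "blocked")
```

If the assumption holds, the gallery page itself stays crawlable while everything it loads from /backlight does not, which would fit the mobile usability warnings appearing and disappearing with the block.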

On another note: now that I have blocked the ?id= URLs, I am getting “Valid with warnings” for 14 pages (I anticipate getting a lot more in due course). The warning reads:

“Indexed, though blocked by robots.txt” and Google explains it here:

https://support.google.com/webmasters/answer/7440203#indexed_though_blocked_by_robots_txt

Bottom line: if you don’t want Google to index a page, Google wants you to use “noindex” rather than a robots.txt block. This brings us back to whether a canonical fix would work, as Google implies it would. If I were able to change the code, then based on what I’ve read on Google’s webmasters pages, I would want to try the “canonical” fix first. However, if this is impossible to do from a coding standpoint, please let me know so I can move past this notion.
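
To make concrete what I mean by the canonical fix, here is a rough sketch in Python. It is not the actual gallery code, which I have not seen. It assumes the dynamic and non-dynamic URLs always pair up the way the two URLs at the top of this post do (single.php?id=X becomes X-single.php in the same directory), and the function names canonical_for and canonical_tag are my own invention.

```python
import html
from urllib.parse import parse_qs, urlsplit, urlunsplit


def canonical_for(url):
    """Map .../single.php?id=X to .../X-single.php; return other URLs unchanged.

    The mapping is an assumption based on the two example URLs in this post.
    """
    parts = urlsplit(url)
    ids = parse_qs(parts.query).get("id")
    if not parts.path.endswith("/single.php") or not ids:
        return url
    directory = parts.path[: -len("single.php")]
    static_path = f"{directory}{ids[0]}-single.php"
    # Drop the query string and fragment from the canonical form.
    return urlunsplit((parts.scheme, parts.netloc, static_path, "", ""))


def canonical_tag(url):
    """Build the <link> element that would go in the page's <head>."""
    href = html.escape(canonical_for(url), quote=True)
    return f'<link rel="canonical" href="{href}">'


dynamic = ("https://campagna.photography/galleries/nature-outdoors/"
           "single.php?id=mc-20200924-3185")
print(canonical_tag(dynamic))
```

The idea is that both versions of a page would carry the same tag pointing at the non-dynamic URL, so Google can still crawl the ?id= version but is told which one to prefer.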

Perry J.